For context:

I’m copying the same files to the same USB drive for comparison from Windows and from my Fedora 41 Workstation.

Around 10k photos.

Windows PC: Dual Core AMD Athlon from 2009, 4GB RAM, old HDD, takes around 40min to copy the files to USB

Linux PC: 5800X3D, 64GB RAM, NVMe SSD, takes around 3h to copy the same files to the same USB stick

I’ve tried changing from NTFS to exFAT, but I get the same result. What can I do to improve this? It’s really annoying.

Random peripherals get tested against Windows a lot more than against Linux, and there are quirks that get worked around on the Windows side.

I would suggest an external SSD for any drive over 32GB. Flash drives are kind of junk in general, and the external SSDs have better controllers and thermals.

Out of curiosity, was the drive reformatted between runs, and was a Linux native FS tried on the flash drive?

The Linux native FS doesn’t help migrate the files between Windows and Linux, but it would be interesting to see exFAT or NTFS vs XFS/ext4/F2FS.

Do you get the same speeds when copying, say, a single 1 GB file? A lot of small files introduces a bit more overhead. rsync can help somewhat.
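One way to answer that is to time a single large write and force the data out to the device, so the page cache cannot hide the real speed. A minimal sketch, assuming GNU `dd` and `date`; the function name `write_speed_test` and the mount path in the usage comment are hypothetical placeholders.

```shell
#!/usr/bin/env bash
# Hypothetical helper: time one large sequential write into a directory.
# conv=fsync makes dd flush the file to the device before it exits, so the
# measured time includes the real write, not just filling the page cache.
write_speed_test() {   # write_speed_test <directory> [size in MiB, default 1024]
    local dir=$1 size_mib=${2:-1024}
    local file="$dir/speedtest.$$" start end
    start=$(date +%s.%N)
    if ! dd if=/dev/zero of="$file" bs=1M count="$size_mib" conv=fsync status=none; then
        rm -f "$file"
        return 1
    fi
    end=$(date +%s.%N)
    rm -f "$file"    # clean up the test file
    # bash has no floating point, so awk computes the rate.
    awk -v s="$start" -v e="$end" -v mib="$size_mib" 'BEGIN {
        t = e - s; if (t <= 0) t = 0.001
        printf "%d MiB in %.1f s (%.1f MiB/s)\n", mib, t, mib / t
    }'
}

# Example (placeholder mount point):
#   write_speed_test "/run/media/$USER/STICK" 1024
```

Comparing this figure with the time for the same amount of data spread over many small files shows how much of the slowdown is per-file overhead.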

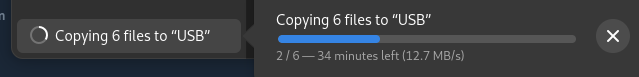

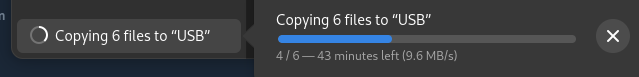

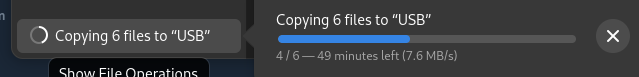

I’m copying 6 video files that are 40 GB in total, and it’s been over 3 h now and still not finished, so it’s not just a lot of small files. It’s just slow as hell in general. Yes, the USB stick is 3.0, it’s connected to a 3.0 port, and I verified it’s actually running on a 3.0 bus. No, it’s not the USB drive’s fault: the same copy takes around 30 min over USB 2.0 on Windows 10. Yes, I’ve tried FAT32, exFAT and NTFS… I couldn’t care less about ext4 for this particular use case, so it’s not relevant, and I haven’t tried it yet because I’m still stuck copying. Not sure what rsync does differently; I just use standard Ctrl+C/Ctrl+V copy/paste, which I expect to work flawlessly in 2025. No idea why I would want to use the command line for copying files to a USB drive. From what I’ve found while looking for a solution, this seems to have been an ongoing problem for over 10 years. Nothing I found has worked yet, and I’m mostly getting the same comments here.

Very strange… So it sounds like you’re using whatever the default file manager is for your desktop. There really isn’t any reason the filesystem type would make things that much slower, so something must be very different about your system to slow transfers down that much.

I would use iotop to see how much data is being written, where, and at what speeds. If you prefer a graphical version of that, maybe “System Monitor” is available to you in GNOME or whatever desktop you use. You’ve probably already tested other drives, I guess; maybe try booting a fresh live USB of something and see whether the problem persists there too.
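In a terminal, `sudo iotop -o` lists only the processes currently doing I/O. A lighter check that needs no root is to watch how much data is still waiting in the page cache (`Dirty`) and how much is being written out right now (`Writeback`), both reported by the kernel in `/proc/meminfo`. A minimal sketch; the function name is a hypothetical choice.

```shell
#!/usr/bin/env bash
# Hypothetical helper: show data waiting to be written (Dirty) and data being
# written right now (Writeback), converted from the kB values in /proc/meminfo.
writeback_status() {
    awk '/^(Dirty|Writeback):/ { printf "%-11s %10.1f MiB\n", $1, $2 / 1024 }' /proc/meminfo
}

# Refresh once per second while a copy runs (Ctrl+C to stop):
#   while true; do clear; writeback_status; sleep 1; done
```

If `Dirty` stays high after the file manager reports the copy as done, the data has not actually reached the stick yet.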

Personally, I have never experienced problems while reading from USB sticks, but I have while writing. I have a 15+-year-old USB 2 stick and a new USB 3.x stick. The USB 2 stick writes at a constant ~20 MB/s, while the USB 3 stick is all over the place, between 200 MB/s and ~0.1 MB/s. Unusable for me. For a while I used external HDDs and SSDs over USB 3, as they somehow run without problems, but they are cumbersome and expensive.

Therefore I have switched to transferring files over the network (for large files I plug in Ethernet) using KDE Connect. Unfortunately it cannot send folders (yet), so I .tar them before sending and untar them afterwards.
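The tar step can look like this. A sketch assuming GNU tar; `pack_folder` and `unpack_folder` are hypothetical names. No compression is used, since photos and videos are already compressed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: pack a folder into one uncompressed .tar file,
# storing paths relative to the folder's parent (so it unpacks as "photos/...").
pack_folder() {     # pack_folder <folder> <archive.tar>
    tar -cf "$2" -C "$(dirname "$1")" "$(basename "$1")"
}

# Hypothetical helper: unpack the archive into a destination directory.
unpack_folder() {   # unpack_folder <archive.tar> <destination dir>
    mkdir -p "$2" && tar -xf "$1" -C "$2"
}

# Example:
#   pack_folder ~/Pictures/photos photos.tar     # sender
#   unpack_folder photos.tar ~/Pictures          # receiver
```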

LocalSend would also be an option. Maybe that can do folders natively.

I’m aware of these programs, but they are just a way around the problem, not a solution. Besides, they have their own limitations… I can’t use KDE Connect because it does not work on my network: I run 3 routers in one network, and both devices would have to be connected to the same router, which is not possible for the same reason I need to run 3 routers in the first place.

Silly question perhaps, but are you sure you’re using the correct port on your Linux system? If I plug my external HD into a USB2 port, I’m stuck at 30-40MB/sec, while on a USB3 port I get ~150-180MB/sec. That’s proportionally similar to the difference you described so I wonder if that’s the culprit.

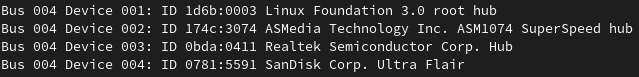

You can verify this in a few different ways. From Terminal, if you run `lsusb`, you’ll see a list of all your USB hubs and devices. It should look something like this:

    Bus 002 Device 001: ID xxxx:yyyy Linux Foundation 3.0 root hub
    Bus 002 Device 002: ID xxxx:yyyy <HDD device name>
    Bus 003 Device 001: ID xxxx:yyyy Linux Foundation 2.0 root hub
    Bus 004 Device 001: ID xxxx:yyyy Linux Foundation 3.0 root hub

So you can see three hubs, one of which is 2.0 and the other two 3.0. The HDD is on bus 002, which we can see is a USB 3.0 hub by looking at the description of Bus 002 Device 001. That’s good. If you see it on a 2.0 bus, or on a bus with many other devices on it, that’s bad, and you should reorganize your USB devices so your low-speed peripherals (mouse, keyboard, etc.) are on a USB 2 bus and only high-speed devices are on the USB 3 bus.
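`lsusb -t` prints the same topology as a tree with the negotiated speed of each port (for example `480M` for USB 2.0 or `5000M` for USB 3.0). The kernel also exposes that speed, in Mbit/s, in each device's `speed` file under `/sys/bus/usb/devices`. A sketch that lists it per device; the function names are hypothetical, and the speed-to-generation table only covers the common values.

```shell
#!/usr/bin/env bash
# Hypothetical helper: translate the link speed the kernel reports (Mbit/s)
# into a USB generation.
usb_speed_label() {   # usb_speed_label <speed in Mbit/s>
    case $1 in
        1.5|12) echo "USB 1.x ($1 Mbit/s)" ;;
        480)    echo "USB 2.0 (480 Mbit/s)" ;;
        5000)   echo "USB 3.x Gen 1 (5 Gbit/s)" ;;
        10000)  echo "USB 3.x Gen 2 (10 Gbit/s)" ;;
        20000)  echo "USB 3.2 Gen 2x2 (20 Gbit/s)" ;;
        *)      echo "unknown ($1 Mbit/s)" ;;
    esac
}

# Hypothetical helper: list every USB device with the speed it negotiated.
# Interface entries (e.g. "2-1:1.0") have no speed file and are skipped.
list_usb_speeds() {
    local dev
    for dev in /sys/bus/usb/devices/*; do
        [ -r "$dev/speed" ] || continue
        printf '%-10s %-30s %s\n' "${dev##*/}" \
            "$(cat "$dev/product" 2>/dev/null || echo '?')" \
            "$(usb_speed_label "$(cat "$dev/speed")")"
    done
}
```

A USB 3 stick that shows up as 480 Mbit/s here is running at USB 2.0 speed, whatever port it is plugged into.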

You can also consult your motherboard’s manual, or just look at the colors of your USB ports. By convention, white ports are usually USB 1.x, black ports USB 2.0, blue ports USB 3.0 (5 Gbps) and teal ports USB 3.1 Gen 2 (10 Gbps), although not every manufacturer follows this.

If you’re running KDE, you can also view these details in the GUI with kinfocenter. Not sure what the Gnome equivalent is.

As a sanity check, I’ve tried it.

The stick is on Bus 004 Device 004, and it’s USB 3.0 as it should be. Also, I’ve disabled caching, and I’m now copying 6 video files at only 15 MB/s (and it’s slowing down; by the time I went to take a screenshot for this post, it had dropped again). It’s still quite a bit slower than on Windows.

Now down to 9MB/s and still going down

EDIT: 12 min later…

Still going…

It just finished: 4 h for 40 GB (6 files).
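Putting the reported numbers side by side as average throughput makes the gap concrete. A small sketch that treats GB as GiB for simplicity; `throughput` is a hypothetical helper, and the inputs are the 4 h figure above and the roughly 30 min Windows 10 figure reported earlier for the same 40 GB.

```shell
#!/usr/bin/env bash
# Hypothetical helper: average throughput in MiB/s for <GiB> copied in <minutes>.
throughput() {   # throughput <GiB> <minutes>
    awk -v gib="$1" -v min="$2" 'BEGIN { printf "%.1f MiB/s\n", gib * 1024 / (min * 60) }'
}

throughput 40 240   # Linux, 40 GB in about 4 h        → 2.8 MiB/s
throughput 40 30    # Windows 10, 40 GB in about 30 min → 22.8 MiB/s
```

That is roughly an eightfold difference, well below what even a USB 2.0 link can sustain.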

Did the USB drive get excessively warm during this? It looks like the drive is throttling.

Incidentally, this is why I switched to using external SSDs. A group of 128 GB flash drives I had would slowly fall over when I wrote 100 GB of files to them.

This is a really good point. I generally have the opposite experience re: Linux vs windows file handling speed. But I have been throttled before by heat.

OP, start again tomorrow and try the reverse, and tell us the results.

I’m using USB 3 10Gbps on my Linux system. The USB stick is USB 3-1.0 and the Windows PC only has USB 2.0 so it should be the slowest but it’s actually several times faster.

I find that it’s around the same, except Linux waits on updating the UI until all write buffers are flushed, whereas Windows does not.

> except Linux waits on updating the UI until all write buffers are flushed, whereas Windows does not.

I wish that were true here. But when I copy movies to a stick, the file manager (XFCE/Thunar) shows the copy as finished and closes the copy notification way, way before it’s even half done.

I use a fast USB 3 stick on a USB 3 port, and I don’t get anywhere near the write speed the stick’s manufacturer claims. So I always open a terminal and run sync to see when it’s actually finished. I hate it to the extreme when systems don’t account for the write cache before claiming a copy is finished. It’s such an ancient problem, we’ve had it since the ’90s, and I find it embarrassing that it still exists on modern systems.

I’ve run sync and it has already exited 4 times, and the copy is still going.

Yes, that’s annoying too. I have no clue why it does that, but when sync says “clear”, I always wait a couple of seconds and run sync again a couple of times to see if it’s actually finished. Only THEN do I unmount the stick.
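`sync` only waits for data that is already dirty when it is called; while the file manager is still copying, new data keeps arriving, so `sync` can return several times before the copy is really over. Unmounting avoids the guesswork, because the unmount does not complete until the filesystem's cached data has been written. A sketch of that sequence, assuming `findmnt` (util-linux) and `udisksctl` (udisks2, as shipped with GNOME) are installed; `finish_copy` and the mount point are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: flush one USB stick and unmount it. sync -f flushes only
# the filesystem containing the given path; the unmount then writes out anything
# left, so when this returns successfully the data is on the stick.
finish_copy() {   # finish_copy /run/media/$USER/STICK   (placeholder path)
    local mnt=$1 dev
    [ -d "$mnt" ] || { echo "no such mount point: $mnt" >&2; return 1; }
    dev=$(findmnt -n -o SOURCE --target "$mnt") || return 1
    echo "Flushing $mnt ($dev)..."
    sync -f "$mnt" || return 1     # block until this filesystem is flushed
    udisksctl unmount -b "$dev"    # runs as the desktop user, no sudo needed
}
```

Run it only after the file manager has stopped copying; the prompt then comes back when it is safe to pull the stick.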

Copy to USB does not seem very solid on Linux IMO. So I also ALWAYS buy sticks with activity LED.

But even that can fool you: sometimes when I think a smaller copy is finished because the LED stops blinking, it suddenly starts up again after having paused for about 1½ seconds?!?!

That’s nice, but I managed to copy 300 GB worth of data from the Windows PC to my Linux PC in around 3 h to make a backup while I reinstall the system, and now I’ve been stuck for half a day copying the data back to the old Windows PC and haven’t even finished 100 GB yet… I noticed this issue long ago but ignored it, as I never really had to copy this much data. Now it’s just infuriating.

One thing I ran into, though it was a while ago, was that disk caching being on would trash performance for writes on removable media for me.

The issue ended up being that the kernel would keep flushing the cache to disk, and while it was doing that, none of your transfers were happening. So it would end up doubling the copy time or more, because the write cache wasn’t actually helping removable drives.

It might be worth remounting without any caching, if it’s on, and seeing if that fixes the mess.
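Remounting with the generic `sync` mount option is one way to do that: writes then go to the device synchronously instead of being buffered in the page cache. On some flash drives this can make writes slower rather than faster, so it is worth timing both. A sketch; `remount_sync`, `mount_has_option` and the mount point are hypothetical. The option check reads `/proc/self/mounts`, where a space in a mount point name appears escaped as `\040`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: remount an already-mounted stick with synchronous writes.
remount_sync() {   # remount_sync /run/media/$USER/STICK   (placeholder path)
    sudo mount -o remount,sync "$1"
}

# Hypothetical helper: succeed if the mount at exactly <mount point> currently
# has <option>, according to the kernel's mount table.
mount_has_option() {   # mount_has_option <mount point> <option>
    local opts
    opts=$(awk -v m="$1" '$2 == m { o = $4 } END { print o }' /proc/self/mounts)
    case ",$opts," in
        *",$2,"*) return 0 ;;
        *)        return 1 ;;
    esac
}

# Example:
#   remount_sync "/run/media/$USER/STICK" && mount_has_option "/run/media/$USER/STICK" sync
```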

But, as I said, this has been a few years, so that may no longer be actively the case.

This actually sounds like it could be the case, I’ll explore tomorrow as I’m already in bed. Thanks for suggestion.

Edit: disabling caching yielded an improvement, but a very minor one; writing to the USB stick still sucks.

Are you using two separate devices? If so another option could be LocalSend, it allows you to send files over the same network.

I used it for sending a couple hundred GBs of files. Didn’t take too too long. Also avoids unnecessary writes to flash media.

`rsync -aP <source>/ <dest>`

I find it faster and more reliable than most GUI explorers

I do `-avP`.

> `-azP` for compression

Compression is good when copying over the network, but it would just waste CPU time when copying to a USB stick.
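For a FAT32 or exFAT stick, a variant along these lines may fit better than `-a`. `-a` also tries to keep permissions, ownership, symlinks and device files, which those filesystems cannot store anyway. `--modify-window=1` makes rsync tolerate FAT32's 2-second timestamp granularity, so a re-run skips files that were already copied. A sketch; `copy_to_stick` and the paths are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy a folder's contents to a USB stick with rsync.
#   -r  recurse into subfolders
#   -t  keep modification times (so re-runs can skip unchanged files)
#   -P  show per-file progress and keep partial files for resuming
#   --modify-window=1  treat timestamps within 1 s as equal (FAT32 granularity)
# No -z: compression only helps over a network, not to a local stick.
copy_to_stick() {   # copy_to_stick <source dir> <destination dir>
    rsync -rtP --modify-window=1 "${1%/}/" "$2"
}

# Example (placeholder paths):
#   copy_to_stick ~/Pictures/photos "/run/media/$USER/STICK/photos"
```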

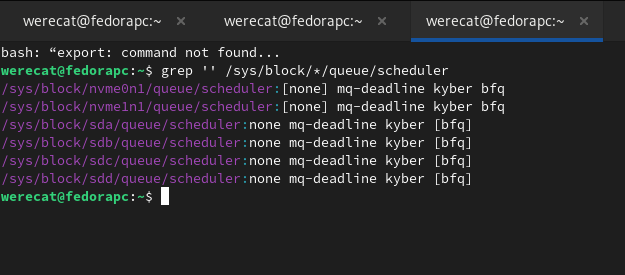

I/O scheduler issue?

Connect that USB stick and run this command:

`grep '' /sys/block/*/queue/scheduler`

Show the output.
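Each of those files lists the I/O schedulers available for that disk, with the active one in [brackets]. A sketch that prints just the active scheduler per `sd*` disk (USB sticks usually appear as `sdX`); the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: extract the active scheduler (the entry in [brackets])
# from the contents of a /sys/block/<dev>/queue/scheduler file.
active_scheduler() {   # active_scheduler "mq-deadline kyber [bfq] none"
    sed -n 's/.*\[\([^]]*\)].*/\1/p' <<< "$1"
}

# Hypothetical helper: print "<disk>: <active scheduler>" for every sd* disk.
show_schedulers() {
    local f dev
    for f in /sys/block/sd*/queue/scheduler; do
        [ -r "$f" ] || continue
        dev=${f#/sys/block/}
        dev=${dev%%/*}
        echo "$dev: $(active_scheduler "$(cat "$f")")"
    done
}
```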

Can I run it while transferring?

Sure!

I see nothing wrong here. So issue must be somewhere else.

That’s just the state of things. I have experienced this as well, trying to copy a 160 GB USB stick to another one (my old iTunes library). Windows manages fine, but neither Linux nor macOS does it properly. They crawl, and in macOS’s case it gets much slower as time goes by, so I had to stop the transfer. Overall, it comes down to how these things are implemented. It’s OK for a few gigabytes, but not for many small files (e.g. 3-5 MB each) spread across many subfolders and many GBs overall. It seems to me that some cache is overfilling, while Windows is more diligent about clearing that cache in time, before things slow to a crawl. Just a weak implementation in both Linux and macOS IMHO, and while I’m a full-time Linux user, I’m not afraid to say it as I experienced it under Debian and Ubuntu.

How are you copying them? It could potentially be faster to use rsync. You can also try mounting with the `noatime` flag.

> noatime

Oh boy, yes I remember I used to disable that shit ages ago in fstab, that’s quite annoying that’s still necessary!!!

> How do you do that for USB sticks?

I’m pretty sure you can just mount it with `mount` and specify `noatime`.

Ah yes of course, I just haven’t used manual mount for almost a decade. So I completely forgot we used to do that. I have to unmount it first though, because USB sticks are automatically mounted.
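An already-mounted stick can also be switched to `noatime` in place with a remount, which skips the unmount step. A sketch; `remount_noatime` and the mount point are hypothetical placeholders, and `findmnt` comes from util-linux.

```shell
#!/usr/bin/env bash
# Hypothetical helper: switch a mounted USB stick to noatime without unmounting,
# then print its current mount options so the change can be checked.
remount_noatime() {   # remount_noatime /run/media/$USER/STICK   (placeholder path)
    local mnt=$1
    [ -d "$mnt" ] || { echo "no such mount point: $mnt" >&2; return 1; }
    sudo mount -o remount,noatime "$mnt" &&
        findmnt -n -o OPTIONS --target "$mnt"   # should now include noatime
}
```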

Ctrl+C, Ctrl+V