So, I’ve drafted two replies to this so far and this is the third.

I tried addressing your questions individually at first, but if I connect the dots, I think you’re really asking something like:

Is there a comprehensive mental model for how to think about this stuff? One that allows us to have technical progress, and also take care of people who work hard to do cool stuff that we find valuable? And most importantly, can’t be twisted to be used against the very people it’s supposed to protect?

I think the answer is either “no”, or maybe “yes – with an asterisk” depending on how you feel about the following argument…

Comprehensive legal frameworks based on rules for proper behavior are fertile ground for big tech exploitation.

As soon as you create objective guidelines for what constitutes employment vs. contracting, you get the gig economy with Uber and DoorDash reaping as many of the boss-side benefits of an employment relationship as possible while still keeping their tippy-toes just outside the border of IRS employee-misclassification territory.

A preponderance of landlords directly messaging each other with proposed rent increases is obviously conspiracy to manipulate the market. If they all just happen to subscribe to RealPage’s algorithmic rent recommendation services and it somehow produces the same external effect, that should shield them from antitrust accusations – for a while at least – right?

The DMCA provisions against tampering with DRM mechanisms were supposed to just discourage removing copyright protections. But instead they’ve become a way to limit otherwise legitimate functionality, because working around the artificial limitation would incidentally require violating the DMCA. And thus, subscriptions for heated seats are born.

This is how you end up with copyright – a legal concept originally aimed at keeping publishers well-behaved – being used against consumers in the first place. When copyright was invented, you needed to invest some serious capital before the concept of “copying” as a daily activity even entered your mind. But computers make an endless number of copies as a matter of their basic operation.

So I don’t think that we can solve any of this (the new problems or the old ones) with a sweeping, rule-based mechanism, as much as my programmer brain wants to invent one.

That’s the “no”.

The “yes – with an asterisk” is that maybe we don’t need to use rules.

Maybe we just establish ways in which harms may be perpetrated, and we rely on judges and juries to determine whether the conduct was harmful or not.

Case law tends to come up with objective tests along the way, but crucially those decisions can be reviewed as the surrounding context evolves. You can’t build an exploitative business based on the exact wording of a previous court decision and say “but you said it was legal” when the standard is to not harm, not to obey a specific rule.

So that’s basically my pitch.

Don’t play the game of “steal vs. fair use”, cuz it’s a trap and you’re screwed with either answer.

Don’t codify fair play, because the whole game that big tech is playing is “please define proper behavior so that I can use a fractal space-filling curve right up against that border and be as exploitative as possible while ensuring any rule change will deal collateral damage to stuff you care about”.

–

Okay real quick, the specifics:

How to guarantee authors are compensated for their works?

Allow them serious weaponry, and don’t allow industry players to become juggernauts. Support labor rights wherever you can, and support ruthless antitrust enforcement.

I don’t think data-dignity/data-dividend is the answer, cuz it’s playing right into that “rules are fertile ground for exploitation” dynamic. I’m in favor of UBI, but I don’t think it’s a complete answer, especially to this.

Any one person can learn about the style of an author and imitate it without really copying the same piece of art. That person cannot be sued for stealing “style”, and it feels like the LLM is basically in the same area of creating content.

First of all, it’s not. Anthropomorphizing large-scale statistical output is a dangerous thing. It is not “learning” the artist’s style any more than an MP3 encoder “learns” how to play a song by being able to reproduce it with sine waves.

(For now, this is an objective fact: AIs are not “learning”, in any sense at all close to what people do. At some point, this may enter into the realm of the mind-body problem. As a neutral monist, I have a philosophical escape hatch when we get there. And I agree with Searle when it comes to the physicalists – maybe we should just pinch them to remind them they’re conscious.)

But more importantly: laws are for people. They’re about what behavior we endorse as acceptable in a healthy society. If we had a bunch of physically-augmented cyborgs really committed to churning out duplicative work to the point where our culture basically ground to a halt, maybe we would review that assumption that people can’t be sued for stealing a style.

More likely, we’d take it in stride as another step in the self-criticizing tradition of art history. In the end, those cyborgs could be doing anything at all with their time, and the fact that they chose to spend it duplicating art instead of something else at least adds something interesting to the cultural conversation, in kind of a Warhol way.

Server processes are a little bit different, in that there’s not really a personal opportunity cost there. So even the cyborg analogy doesn’t quite match up.

How can we differentiate between a synthetic voice trained with thousands of voices, but not the voice of person A, that ends up similar to the voice of A, and the case of a company “stealing” the voice of A directly?

Yeah, the Her/Scarlett Johansson situation? I don’t think there really is (or should be) a legal issue there, but it’s pretty gross to try to say-without-saying that this is her voice.

Obviously, they were trying to be really coy about it in this case, without considering that their technology’s main selling point is that it fuzzes things to the point where you can’t tell if they’re lying about using her voice directly or not. I think that’s where they got more flak than if they were any other company doing the same thing.

But skipping over that, supposing that their soundalike and the speech synthesis process were really convincing (they weren’t), would it be a problem that they tried to capitalize on Scarlett/Her without permission? At a basic level, I don’t think so. They’re allowed to play around with culture the same as anyone else, and it’s not like they were trying to undermine her ability to sell her voice going forward.

Where it gets weird is if you’re able to have it read you erotic fan fiction as Scarlett in a convincing version of her voice, or use it to try to convince her family that she’s been kidnapped. I think that gets into some privacy rights, which are totally different from economics, and that could probably be a whole nother essay.

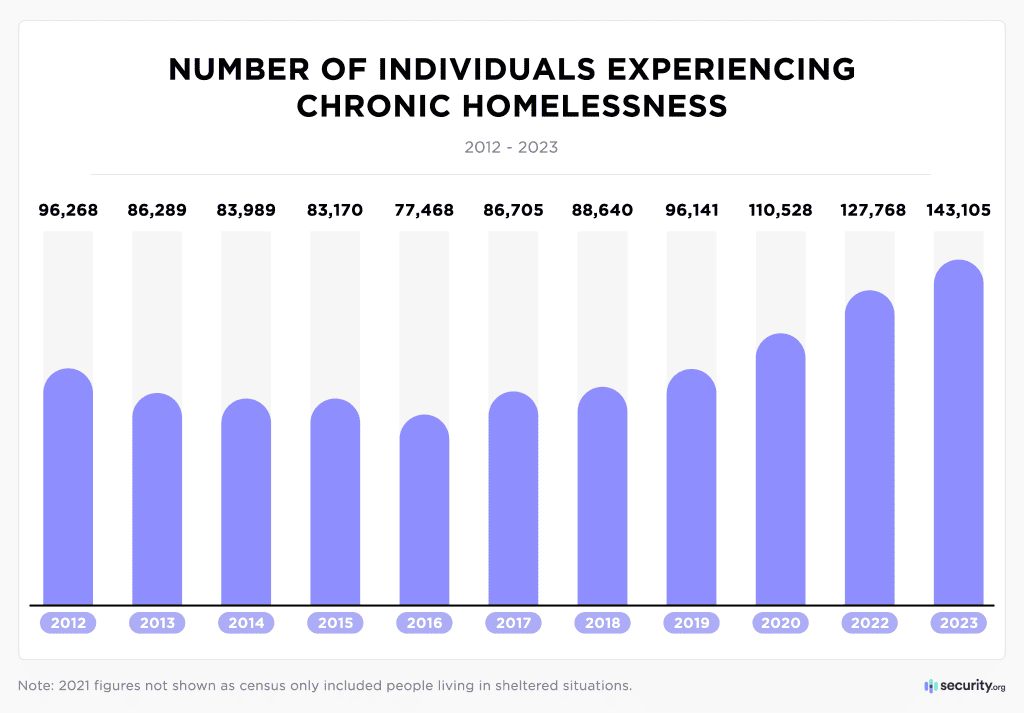

Economy’s doing great though apparently.